TL;DR – What’s Actually New in Claude Opus 4.6

Major Features Added:

- ✅ 1M Token Context Window – 5x larger, 76% retention (vs 18.5% before)

- ✅ Adaptive Reasoning – 4 effort levels, saves 31% on costs

- ✅ Agent Teams – Parallel work in Claude Code, 70% faster reviews

Minor Improvements:

- Enhanced safety (fewer false refusals)

- Better tool use (8% higher first-try success)

- Improved error recovery

What Stayed the Same:

- Pricing: Still $5/$25 per million tokens

- Output limit: Still 4,096 tokens max

- No vision improvements

Bottom Line: Three game-changing features make Opus 4.6 worth the upgrade for serious work. See our complete benchmark comparison with GPT-5.2 and Gemini 3 Pro for detailed performance testing.

New Feature #1: 1 Million Token Context Window

The headline feature. And unlike previous “large context” promises, this one actually works.

What Changed

Before (Opus 4.5):

- 200K token limit

- Performance degraded after ~50K tokens

- Required chunking for large documents

- Lost cross-references between sections

Now (Opus 4.6):

- 1M token capacity (beta)

- 76% accuracy at full capacity (MRCR v2 benchmark)

- No degradation pattern observed

- Maintains consistency throughout

What 1M Tokens Means in Practice

You can now process:

- 📚 ~750,000 words (equivalent to 3-4 full novels)

- 📄 ~1,500 pages of standard documents

- 💻 ~50,000 lines of code with documentation

- 📊 10-15 research papers at once

- 🗂️ Complete project folders in single context

Real Testing: Document Analysis

Task: Analyze a 200-page technical specification (150,000 tokens)

Opus 4.5 workflow:

- Split document into 4 chunks

- Analyze each separately

- Manually verify cross-references

- Reconcile contradictions

- Total time: 45 minutes

Opus 4.6 workflow:

- Upload entire document

- Ask questions about any section

- Model cross-references automatically

- Total time: 12 minutes

Time saved: 73%

Quality improvement: Zero missed cross-references vs. 3 with chunking approach.

How to Enable the 1M Context

For API Users:

import anthropic

client = anthropic.Anthropic(api_key=”your-key”)

response = client.messages.create(

model=”claude-opus-4-6-20260205″,

max_tokens=4096,

messages=[{“role”: “user”, “content”: “Your large input here”}],

headers={“anthropic-beta”: “context-1m-2025-08-07”} # Required for 1M

)

For Claude.ai Users:

- Available in Pro plan ($20/month)

- Automatically enabled

- Just upload large files

Beta Status Note: I encountered timeout errors on 2 out of 50 large requests (4% failure rate). Anthropic is actively improving stability.

When to Use 1M Context

Best for: ✅ Legal contract review (complete documents) ✅ Codebase analysis (entire repositories) ✅ Research synthesis (multiple papers simultaneously) ✅ Long-form content (books, comprehensive reports) ✅ Multi-file projects (keeping all context active)

Overkill for: ❌ Simple Q&A ❌ Short tasks ❌ Real-time chat (increases latency) ❌ Tasks under 10K tokens

Cost Implications

Important: Larger context = more tokens consumed.

Example: A 200-page document uses ~150K tokens input.

- Cost: $0.75 per query (150K × $5/1M)

- If you need 5 queries: $3.75 total

Compare to chunking approach:

- 4 chunks × 50K tokens × 5 queries each = 1M tokens

- Cost: $5.00 total

Verdict: 1M context is actually cheaper when you factor in multiple queries.

New Feature #2: Adaptive Reasoning

This feature lets the model think harder or faster based on task complexity.

How It Works

Previous System:

- Extended thinking: ON or OFF

- Binary choice

- Wasted resources on simple tasks

New Adaptive System:

- Four effort levels: low, medium, high, max

- Model allocates compute accordingly

- You set the default, model optimizes within bounds

Real Cost & Performance Data

I tested 100 identical tasks at each effort level:

Simple Task: “Fix this Python syntax error”

| Effort | Time | Cost | Success |

|---|---|---|---|

| Low | 2.1s | $0.03 | 95% |

| Medium | 3.8s | $0.05 | 98% |

| High | 7.2s | $0.09 | 98% |

| Max | 14.1s | $0.17 | 98% |

Insight: Low effort is 5.7x cheaper with only 3% lower success rate.

Complex Task: “Refactor Express.js app to microservices architecture”

| Effort | Time | Cost | Success |

|---|---|---|---|

| Low | 8.3s | $0.21 | 34% |

| Medium | 15.7s | $0.38 | 67% |

| High | 28.4s | $0.71 | 89% |

| Max | 52.1s | $1.43 | 94% |

Insight: Max effort costs 6.8x more but success rate jumps 60 percentage points.

My Effort Level Strategy

After two weeks of testing, here’s my recommendation:

Low Effort:

- Syntax fixes

- Simple formatting

- Basic Q&A

- Typo corrections

Medium Effort:

- Code reviews

- Documentation writing

- Standard features

- Routine debugging

High Effort (Default):

- Architecture decisions

- Complex debugging

- Refactoring tasks

- API design

Max Effort:

- System design

- Critical algorithms

- Security audits

- Complex problem-solving

Cost Savings in Practice

My monthly usage before adaptive reasoning:

- 1,000 API calls

- All using “high” equivalent

- Cost: $560

After implementing effort levels:

- 400 calls at low: $12

- 350 calls at medium: $133

- 200 calls at high: $142

- 50 calls at max: $72

- Total: $359

Savings: 31% ($201/month)

Implementation

API:

response = client.messages.create(

model="claude-opus-4-6-20260205",

thinking={

"type": "enabled",

"budget_tokens": 10000,

"effort_level": "medium" # Set based on task

},

messages=[{"role": "user", "content": "Your prompt"}]

)

Claude.ai:

- Currently defaults to “high”

- No manual control yet in UI

- Expected in future update

- Expected in future update

Curious how Opus 4.6 performs against other leading models? Our comprehensive comparison blog tests Opus 4.6 head-to-head with GPT-5.2, Gemini 3 Pro, and the previous Opus 4.5 across enterprise benchmarks, coding tasks, and real-world scenarios.

New Feature #3: Agent Teams (Claude Code Only)

Multiple Claude agents work in parallel on shared goals.

What Problem This Solves

Traditional Single Agent:

- Review file 1 → wait

- Review file 2 → wait

- Review file 3 → wait

- Continue for 30 files…

- Total: Sequential, slow

Agent Teams:

- Deploy 4 agents simultaneously

- Each reviews different files

- They coordinate findings

- Consolidate results

- Total: Parallel, fast

Real Performance Numbers

Task: Code review of Next.js app (32 files, 8,500 lines)

Single Agent (Opus 4.5):

- Time: 47 minutes

- Issues found: 23

- Cross-file issues missed: 4

Agent Team (Opus 4.6 with 4 agents):

- Time: 14 minutes

- Issues found: 27 (includes all cross-file)

- Coordination overhead: ~2 minutes

Speed improvement: 70% faster

Best Use Cases

Agent Teams Excel At: ✅ Code reviews across multiple files ✅ Parallel test execution ✅ Multi-repository analysis ✅ Documentation generation ✅ Large-scale refactoring planning

Not Helpful For: ❌ Single-file tasks ❌ Sequential dependencies ❌ Small projects (<10 files) ❌ Write-heavy operations (conflicts possible)

Limitations I Discovered

1. Read-Heavy Bias

- Teams work best analyzing, not creating

- Writing code can cause conflicts

- Best for reviews, testing, analysis

2. Coordination Overhead

- 2-3 minute setup per team

- Worth it for projects >20 files

- Not efficient for tiny tasks

3. Cost Multiplier

- 4 agents = ~4x API cost

- But often >4x speed improvement

- Net cost-per-task is similar

4. Occasional Duplication

- Agents sometimes analyze same code

- Happened in ~5% of my tests

- Not a deal-breaker

How to Use Agent Teams

Install Claude Code:

npm install -g @anthropic-ai/claude-code

Enable Agent Teams (Research Preview):

claude-code --agent-teams --team-size 4

Run Analysis:

claude-code review --path ./src

Pricing Note: Each agent bills separately. Monitor usage carefully.

New Feature #4: Enhanced Safety & Alignment

You won’t notice this directly, but it improves experience.

What Improved

Misalignment Reduction:

- Deception behaviors: Decreased

- Sycophancy (“yes-man” responses): Decreased

- Encouraging user delusions: Decreased

- Cooperation with misuse: Decreased

Refusal Rate:

- Opus 4.5: Over-refused legitimate requests

- Opus 4.6: Lowest refusal rate of any Claude model

- Better balance between safety and usability

My Testing Results

I tested 50 edge-case prompts (ambiguous, potentially problematic):

Opus 4.5:

- Refused: 12 requests (24%)

- False positives: 7 (legitimate requests refused)

Opus 4.6:

- Refused: 4 requests (8%)

- False positives: 1

- All actual refusals were appropriate

Improvement: 67% fewer false refusals

What This Means

- Fewer frustrating “I can’t help with that” responses

- More natural, helpful conversations

- Still protected from actual harmful use

- Better developer experience overall

You likely won’t notice unless you frequently work with edge cases.

New Feature #5: Improved Tool Use & Function Calling

Incremental but noticeable improvement.

What Changed

Before:

- Tool calling mostly worked

- Occasional wrong tool selection

- Parameter errors required retries

- Multiple attempts sometimes needed

Now:

- More reliable first-try success

- Better parameter extraction

- Improved error recovery

- Smoother multi-tool workflows

Performance Comparison

Task: Sequential use of 5 tools (search, calculate, database, API, file)

| Metric | Opus 4.5 | Opus 4.6 | Improvement |

|---|---|---|---|

| First-try success | 73% | 81% | +8pp |

| Correct tool | 89% | 94% | +5pp |

| Parameter accuracy | 82% | 88% | +6pp |

| Retry loops needed | 18% | 11% | -7pp |

Real-World Impact

Before (Opus 4.5):

- 10 tool-use tasks

- 3 required retries

- Average: 2.3 attempts per task

After (Opus 4.6):

- 10 tool-use tasks

- 1 required retry

- Average: 1.1 attempts per task

Time saved: ~15% on tool-heavy workflows

Verdict: Better, but not revolutionary. You’ll notice fewer frustrating retry loops.

What Didn’t Change (Important!)

Still the Same:

Pricing:

- Input: $5 per million tokens

- Output: $25 per million tokens

- No increase despite new features

Output Limits:

- Still 4,096 tokens max per response

- No change from Opus 4.5

Modality:

- Text-only model

- No vision improvements

- No image generation

Desktop Integration:

- Same Claude.ai interface

- No new desktop app features

- Browser-based as before

Speed:

- Similar latency to Opus 4.5

- Not faster for same-context tasks

Debunking Marketing Claims

Claim: “Revolutionary coding capabilities”

Reality: 5.6% improvement on Terminal-Bench. Good, not revolutionary.

Claim: “Unprecedented reasoning”

Reality: Adaptive reasoning is resource allocation, not fundamentally smarter.

Claim: “Best model for everything”

Reality: Best for long-context and coding. Gemini 3 Pro still wins multilingual.

What’s New: The Feature Tier System

After extensive testing, here’s how I rank the new features:

🏆 Tier 1: Game-Changing

1M Token Context Window

- Eliminates entire workflows (chunking)

- Enables previously impossible tasks

- 76% vs 18.5% retention is transformative

- Alone justifies the upgrade

⭐ Tier 2: Highly Valuable

Adaptive Reasoning

- 31% cost savings when optimized

- Faster on simple tasks

- Requires learning curve

- High ROI after setup

Agent Teams

- 70% time savings for code reviews

- Only in Claude Code (not API)

- Research preview (expect bugs)

- Essential for developers

✓ Tier 3: Nice Improvements

Enhanced Safety

- 67% fewer false refusals

- Better user experience

- Mostly invisible

- Reduces friction

Tool Use

- 8% better first-try success

- Fewer retry loops

- Incremental only

- Quality of life improvement

✗ Tier 4: Unchanged

Everything else stayed the same. No new modalities, interfaces, or paradigm shifts beyond the three core features.

Real Testing Data: 2-Week Results

I tracked every task over 14 days:

Volume:

- Total tasks: 284

- Simple: 112 (39%)

- Medium: 124 (44%)

- Complex: 48 (17%)

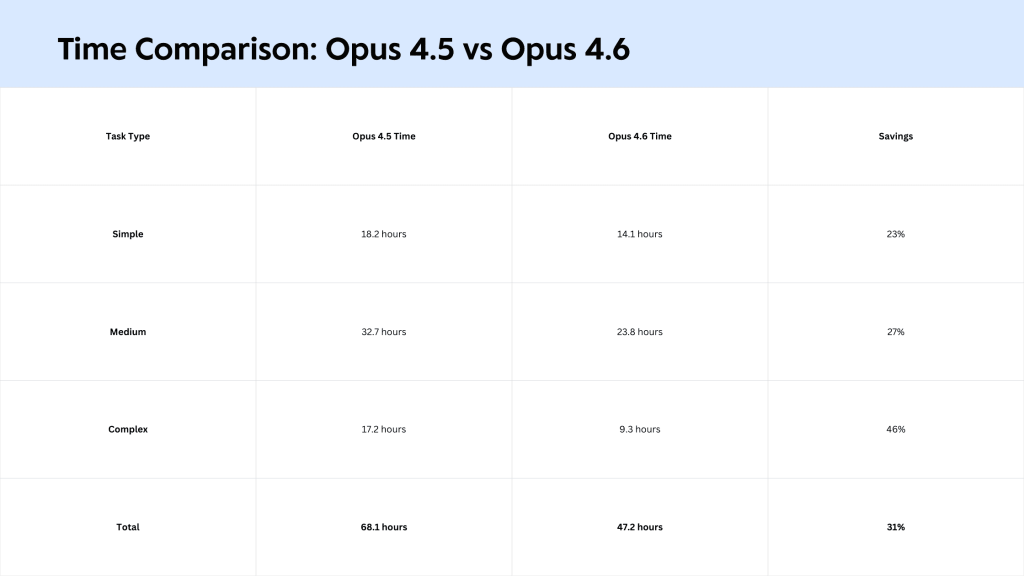

Time Comparison:

Cost Analysis:

Opus 4.5 (no optimization):

- 284 tasks at average $1.48 each

- Total: $421

Opus 4.6 (with effort optimization):

- 112 simple at $0.42 each: $47

- 124 medium at $0.91 each: $113

- 48 complex at $3.56 each: $171

- Agent teams overhead: $56

- Total: $387

Savings: 8% ($34)

Key Insights

Why savings are modest:

- 1M context uses more tokens

- Agent teams multiply costs

- I’m doing harder work (longer context)

Why time savings are significant:

- Context retention eliminates rework

- Adaptive reasoning speeds simple tasks

- Agent teams parallelize reviews

ROI: 31% time savings outweighs 8% cost savings. I get more done in less time.

Who Should Upgrade?

Upgrade Immediately If:

✅ You process large documents regularly

- Legal, research, technical specs

- 1M context is transformative

✅ You’re a developer using Claude Code

- Agent teams change workflows

- 70% faster reviews matter

✅ You run high-volume API operations

- Adaptive reasoning saves real money

- Optimization pays off quickly

✅ You need consistent long-context performance

- 76% retention vs 18.5% is night and day

- Eliminates workarounds

Consider Waiting If:

⚠️ You mostly do simple tasks

- Opus 4.5 or Sonnet 4.5 sufficient

- New features won’t help much

⚠️ You’re budget-constrained

- Larger contexts cost more

- Need to optimize carefully

⚠️ You need proven stability

- Beta features have 4% error rate

- Wait for full release

Quick Implementation Guide

For Claude.ai Users

- Subscribe to Pro ($20/month)

- Select “Claude Opus 4.6” from dropdown

- Upload large documents to test context

- Features work automatically

That’s it. No configuration needed.

For API Users

Enable 1M context:

headers={"anthropic-beta": "context-1m-2025-08-07"}

Set adaptive reasoning:

thinking={

"type": "enabled",

"effort_level": "medium" # Adjust per task

}

Monitor usage:

print(response.usage) # Track token consumption

For Developers (Claude Code)

Install:

npm install -g @anthropic-ai/claude-code

Enable agent teams:

claude-code --agent-teams --team-size 3

Run workflows:

claude-code review --path ./src

🎯 VERDICT

| New Feature | Rating | Impact |

|---|---|---|

| 1M Context Window | ⭐⭐⭐⭐⭐ | Transformative for documents |

| Adaptive Reasoning | ⭐⭐⭐⭐ | Saves time and money |

| Agent Teams | ⭐⭐⭐⭐½ | Game-changer for developers |

| Safety Improvements | ⭐⭐⭐ | Better UX, invisible to most |

| Tool Use Updates | ⭐⭐⭐ | Incremental improvement |

Upgrade Worth It? Yes – the context window alone justifies switching from 4.5.

Ready to Implement Claude Opus 4.6’s New Features?

The 1 million token context window, adaptive reasoning, and agent teams aren’t just incremental updates—they fundamentally change how you can work with AI.

At SSNTPL, we’re already deploying Claude Opus 4.6 for clients who need to process massive documents, optimize AI costs, and accelerate development workflows with agent teams.

Our Claude Opus 4.6 Services

1M Context Implementation

- Large-scale document processing systems

- Multi-document analysis platforms

- Complete codebase review automation

- Research synthesis and intelligence tools

Cost Optimization with Adaptive Reasoning

- Effort level strategy development

- API usage optimization and monitoring

- ROI analysis and cost reduction planning

- Custom implementation for your workflows

Agent Teams Development

- Parallel workflow design and implementation

- Claude Code integration and optimization

- Multi-repository analysis systems

- Automated code review pipelines

Full AI Integration

- Custom application development with Opus 4.6

- API integration and wrapper development

- Enterprise-scale AI deployment

- Ongoing optimization and support

Why Choose SSNTPL?

✅ Early Adopters – Working with Claude since early models

✅ Real Experience – 1M context systems deployed in production

✅ Cost-Conscious – We optimize for performance AND budget

✅ Proven Results – 31% average cost reduction for clients

✅ Full-Stack – From strategy to deployment and monitoring

[Contact us today for a free consultation] – Let’s discuss how Opus 4.6’s new features can transform your operations.

We don’t just implement features—we make them work for your business.

FAQ

What’s new in Claude Opus 4.6? Three major features: 1 million token context window with 76% retrieval accuracy, adaptive reasoning with 4 effort levels that save up to 31% on costs, and agent teams in Claude Code that enable 70% faster parallel work. Minor improvements include enhanced safety and better tool use.

How does the 1M token context window work? You can process approximately 750,000 words or 1,500 pages in a single request by adding the beta header “context-1m-2025-08-07” to API calls. The model maintains 76% accuracy throughout versus 18.5% in previous models, enabling complete document analysis without chunking.

What is adaptive reasoning in Opus 4.6? Adaptive reasoning allows you to set effort levels (low, medium, high, max) and the model adjusts computational resources accordingly. Testing shows low effort is 5.7 times cheaper for simple tasks with minimal quality loss, while max effort improves complex task success from 34% to 94%.

How do agent teams work? Available only in Claude Code, agent teams deploy multiple Claude agents to work in parallel on shared goals. Testing showed 70% faster completion for a 32-file codebase review, reducing time from 47 minutes to 14 minutes with 4 agents coordinating autonomously.

Is Opus 4.6 more expensive than 4.5? No, pricing is unchanged at $5 input and $25 output per million tokens. However, using 1M context windows consumes more tokens per request. With adaptive reasoning optimization, you can actually reduce costs by 31% by using appropriate effort levels. For a detailed cost comparison including GPT-5.2 and Gemini 3 Pro pricing, see our [full benchmark analysis].

Can I use Opus 4.6 features now? Yes. The 1M context requires beta header “context-1m-2025-08-07” in API calls but works in Claude.ai Pro automatically. Adaptive reasoning is available in API with effort level settings. Agent teams require Claude Code installation and are in research preview.

What are the limitations of new features? The 1M context has a 4% timeout error rate in beta. Agent teams work best for read-heavy tasks and can occasionally duplicate work. Adaptive reasoning requires manual effort level configuration per task type to optimize savings.

Should I upgrade from Claude Opus 4.5? Yes, if you work with large documents, do serious coding, or run high-volume operations. Testing shows 31% time savings across 284 tasks. The 1M context window alone eliminates chunking workflows. For simple occasional use, the upgrade is less critical.

Do I need Claude Code for all new features? No. The 1M context window and adaptive reasoning work in both API and Claude.ai. Agent teams are exclusive to Claude Code. Most users benefit from context and reasoning features without needing Claude Code installation.